Computational Light Laboratory and its collaborators contribute to ACM SIGGRAPH Asia 2024 with innovative computational display and lensless camera research

Written by Kaan Akşit, 15 November 2024

ACM SIGGRAPH Asia 2024 continues to serve as one of the leading venues for research in computer graphics and interactive techniques. This year, the conference takes place from 3 to 6 December in Tokyo, Japan. We, the members of the Computational Light Laboratory (CLL) and its global research collaborators, are contributing to ACM SIGGRAPH Asia 2024 with our cutting-edge research. Our work continues to deliver innovations in emerging fields, including computational displays and lensless cameras. This post briefly introduces our work presented at the conference.

Propagating light to arbitrarily shaped surfaces

Focal Surface Holographic Light Transport using Learned Spatially Adaptive Convolutions

Presented by: Chuanjun Zheng and Yicheng Zhan

Location: G510, G Block, Level 5, Tokyo International Forum, Tokyo, Japan

Time: 11:27 am and 11:40 am JST, Wednesday 4 December 2024

Session: Mixed Reality & Holography

Classical computer graphics works with rays to render 3D scenes, largely ignoring the wave characteristics of light. In recent years, treating light as waves in rendering pipelines has gained strong attention, driven by increasing interest in holographic displays and in improving physical realism in computer graphics.

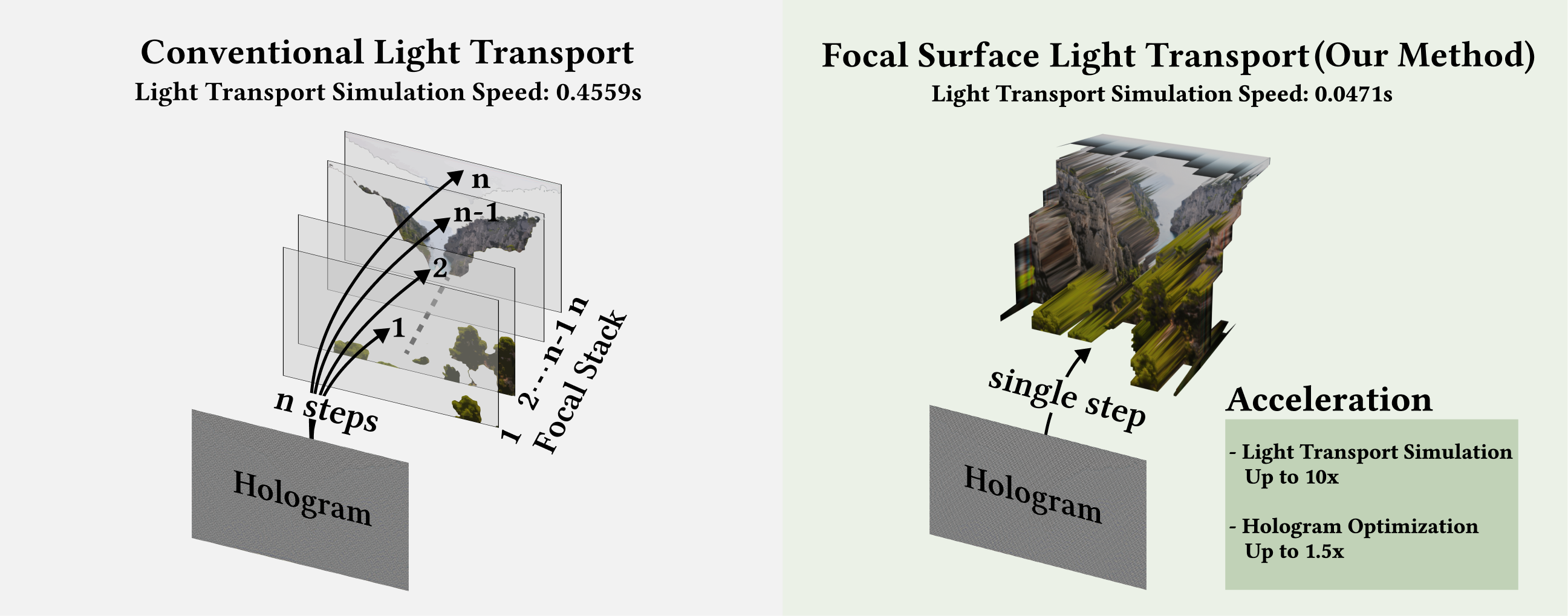

Chuanjun Zheng has spearheaded a new wave-based rendering method for light beams that emerge from a plane and land on arbitrarily shaped surfaces. Previous literature on wave-based rendering typically relies on planes to propagate light in 3D, which requires multiple computational steps because a 3D scene has to be sliced into many planes.

Our work offers a way to overcome the computational complexity arising from this plane-to-plane treatment, and unlocks a new rendering method that could propagate light beams from a plane to a focal surface. This new model could help reduce computational complexity in simulating light. Specifically, it could help verify and calculate holograms for holographic displays more easily and with less computation.
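To make the cost of the plane-to-plane treatment concrete, here is a minimal NumPy sketch of the conventional angular spectrum method, which is the kind of baseline the paragraphs above refer to and not the learned focal surface model from our paper. The function name, wavelength, pixel pitch, resolution and depth range are all illustrative assumptions. Note that covering a set of depth planes takes one full propagation per plane.

```python
import numpy as np


def angular_spectrum_propagate(field, wavelength, pixel_pitch, distance):
    """Propagate a complex field between two parallel planes.

    Hypothetical helper for illustration; all units are meters.
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pixel_pitch)
    fy = np.fft.fftfreq(ny, d=pixel_pitch)
    FX, FY = np.meshgrid(fx, fy)
    # Squared longitudinal spatial frequency; non-positive values are evanescent.
    kz_sq = (1.0 / wavelength) ** 2 - FX ** 2 - FY ** 2
    propagating = kz_sq > 0
    kz = 2.0 * np.pi * np.sqrt(np.where(propagating, kz_sq, 0.0))
    # Transfer function: a pure phase delay for propagating components.
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


# Assumed example values, not taken from the paper.
rng = np.random.default_rng(0)
hologram = np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, (64, 64)))  # phase-only field
wavelength = 515e-9
pixel_pitch = 8e-6
depths = np.linspace(1e-3, 5e-3, 8)  # a 3D scene sliced into eight planes

# One full FFT-based propagation is needed for every depth slice.
planes = [
    angular_spectrum_propagate(hologram, wavelength, pixel_pitch, z)
    for z in depths
]
print(len(planes), "propagations for", len(depths), "depth planes")
```

In this baseline, the number of propagations grows with the number of slices, which is the overhead that propagating directly from a plane to a focal surface aims to avoid.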

Chuanjun Zheng conducted this major effort as our remote intern, showing exemplary scientific rigor and motivation. The work was also conducted in collaboration with partners from industry and academia, including Yicheng Zhan (UCL), Liang Shi (MIT), Ozan Cakmakci (Google) and Kaan Akşit (UCL). For more technical details, including the manuscript, supplementary materials, examples and codebase, please visit the project website.

Precisely detecting the orientation of objects remotely at micron scale

SpecTrack: Learned Multi-Rotation Tracking via Speckle Imaging

Presented by: Ziyang Chen

Location: Lobby Gallery (1) & (2), G Block, Level B1, Tokyo International Forum, Tokyo, Japan

Time: 09:00 am and 06:00 pm JST, Tuesday, 3 December 2024

Session: Poster

Tracking objects and people using cameras is commonplace in computer vision and interactive techniques research. However, vision-based methods are limited in how accurately they can identify the spatial location of objects: they provide an accuracy in the ballpark of several centimeters.

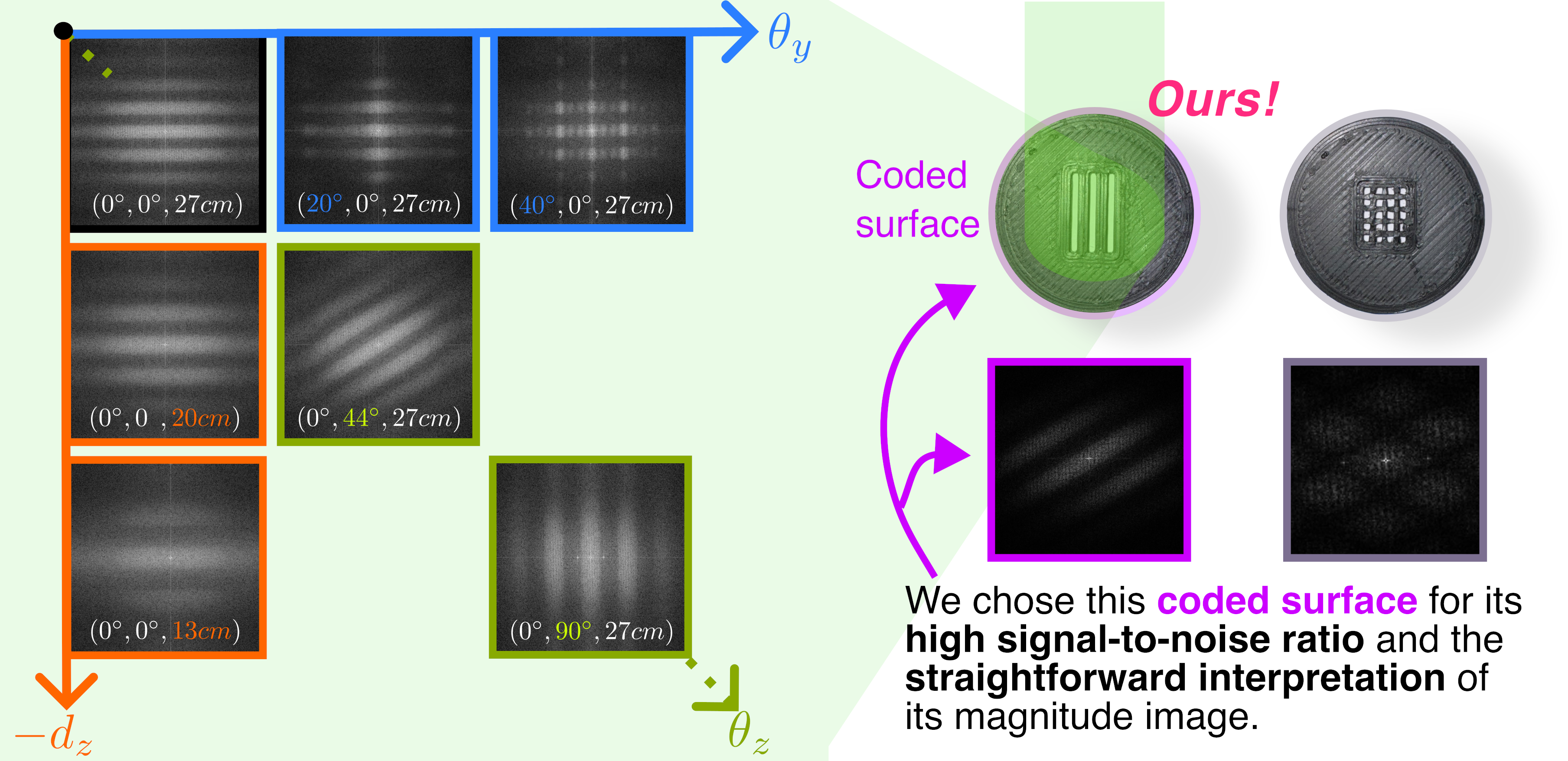

Ziyang Chen built a hardware setup that contains a lensless camera, a laser and a controllable stage to investigate speckle imaging as an alternative to vision-based systems for tracking objects. Previous literature demonstrated that speckle imaging could provide micron-scale tracking ability. However, the literature was missing a technique to precisely detect the orientation of objects in a 3D scene using speckle imaging. Our work addresses this gap by proposing a learned method that does so. This approach relies on our lightweight learned model and a clever use of coded apertures on objects.
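For intuition on why speckle patterns are so sensitive to small motions, here is a toy NumPy sketch. It is not the SpecTrack method: it uses no coded apertures, no learned model and no lensless camera model. It only assumes a rough surface with random phase, a far field computed with an FFT, and a tilt modeled as a linear phase ramp. The function name and all values are hypothetical. Under these assumptions, a small tilt shifts the whole speckle pattern, and the shift can be recovered by cross-correlation.

```python
import numpy as np


def speckle_intensity(surface_phase, tilt_bins=0):
    """Far-field speckle intensity of a rough surface (toy model).

    tilt_bins is a hypothetical parameter: the tilt expressed as the
    number of frequency bins that its linear phase ramp shifts the pattern by.
    """
    ny, nx = surface_phase.shape
    columns = np.arange(nx)
    # A small tilt adds a linear phase ramp across the illuminated surface.
    ramp = np.exp(2j * np.pi * tilt_bins * columns / nx)
    field = np.exp(1j * surface_phase) * ramp[np.newaxis, :]
    # Fraunhofer (far-field) approximation: the pattern is a Fourier transform.
    return np.abs(np.fft.fft2(field)) ** 2


rng = np.random.default_rng(1)
rough_surface = rng.uniform(0.0, 2.0 * np.pi, (128, 128))

reference = speckle_intensity(rough_surface)
tilted = speckle_intensity(rough_surface, tilt_bins=3)

# Circular cross-correlation via FFT; its peak gives the pattern shift.
correlation = np.fft.ifft2(
    np.fft.fft2(tilted) * np.conj(np.fft.fft2(reference))
).real
shift_rows, shift_cols = np.unravel_index(
    np.argmax(correlation), correlation.shape
)
print("estimated shift (rows, columns):", int(shift_rows), int(shift_cols))
# estimated shift (rows, columns): 0 3
```

This shift-only picture handles a single small tilt. Recovering several rotations at once is a harder problem, which is where a learned approach such as the one described above comes in.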

Ziyang Chen, coming from a computer science background, swiftly adapted to the challenges of hardware research by building a bench-top optical setup in our laboratory space, and he managed to couple it with artificial intelligence through his learned model. Ziyang Chen conducted this research as part of both his master's thesis and his first-year Ph.D. studies at UCL over the past year and a half. He collaborated with industrial and academic researchers on this project, including Doğa Doğan (Adobe), Josef Spjut (NVIDIA), and Kaan Akşit (UCL). For more technical details, including the manuscript, supplementary materials, examples and codebase, please visit the project website.

Public outcomes

We release the codebase for each project on its project website (Focal Surface Holography and SpecTrack) so that other researchers and developers can replicate our results and compare their own developments with ours in the future. These codebases use modern Python libraries and our toolkit, Odak. We have also integrated our models into Odak.

These model classes also have their unit tests available in:

test/test_learn_wave_focal_surface_light_propagation.py, test/test_learn_lensless_models_spec_track.py.

These models ship with the new version, odak==0.2.6, and are readily available to install using pip install odak.
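If you are unsure whether your environment already has a recent enough release, a small check along the following lines may help. This is a sketch that uses only the Python standard library. The helper names are hypothetical, and the version comparison is deliberately simplified: it only looks at the leading dotted numbers of the version string.

```python
import re
from importlib import metadata
from typing import Optional, Tuple


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of a package, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def release_tuple(version: str) -> Tuple[int, ...]:
    """Turn the leading dotted numbers of a version into a tuple of ints."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"Unrecognised version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(version: str, minimum: str) -> bool:
    """Simplified comparison; ignores pre-release and post-release suffixes."""
    return release_tuple(version) >= release_tuple(minimum)


found = installed_version("odak")
if found is None:
    print("odak is not installed; try: pip install odak")
elif meets_minimum(found, "0.2.6"):
    print(f"odak {found} is installed and includes the new models")
else:
    print(f"odak {found} is older than 0.2.6; try: pip install --upgrade odak")
```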

To learn more about how to install odak, visit our README.md.

Photo gallery

Here, we release photographs from our visit to the conference, highlighting parts of our SIGGRAPH Asia experience.

Outreach

We host a Slack group with more than 250 members. This Slack group focuses on the topics of rendering, perception, displays and cameras. The group is open to the public, and you can become a member by following this link.

Contact Us

Warning

Please reach out to us by email to share your feedback and comments.