AutoColor: Learned Light Power Control for Multi-Color Holograms

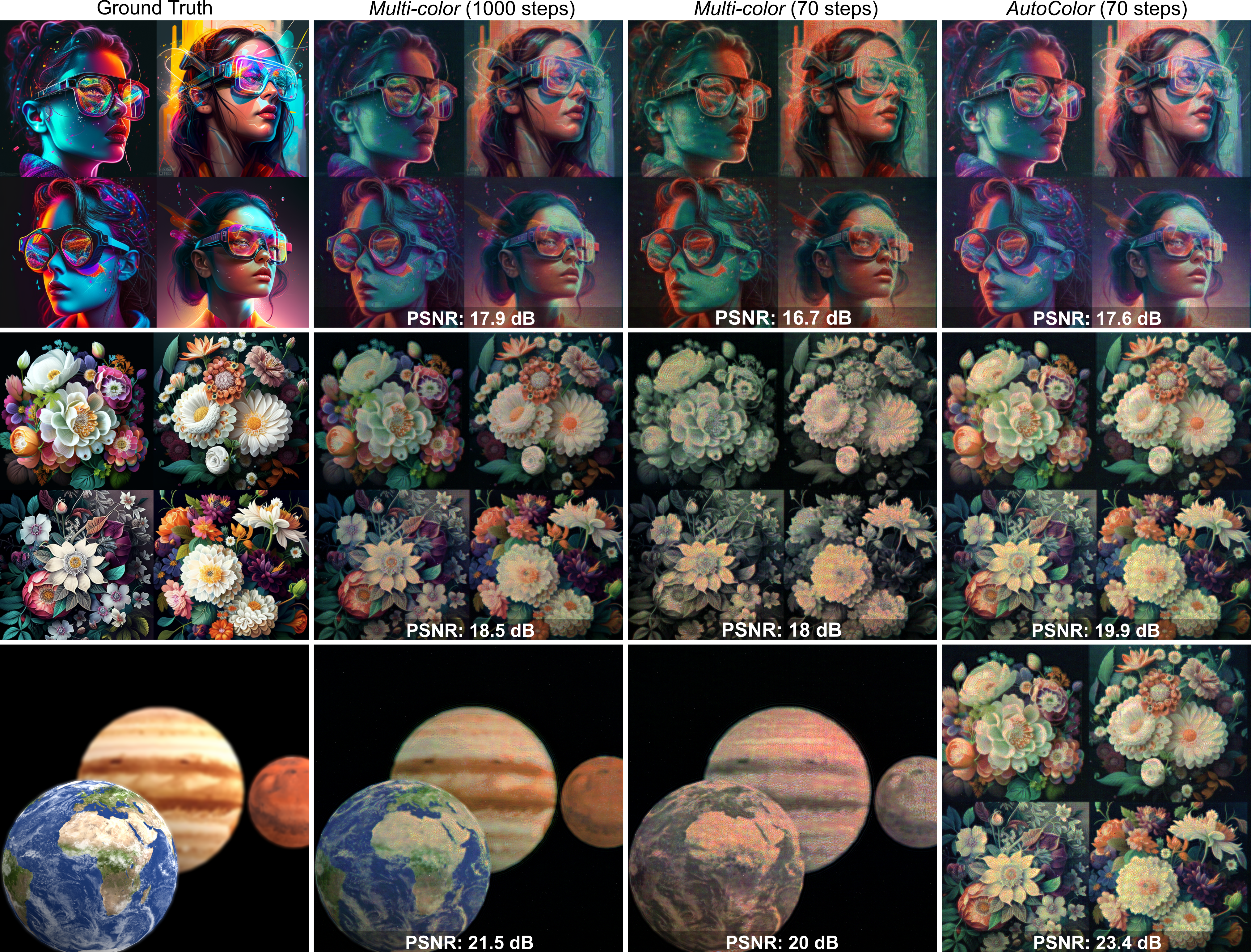

Multi-color holograms rely on simultaneous illumination from multiple light sources. These multi-color holograms can use light sources more effectively than conventional single-color holograms and can improve the dynamic range of holographic displays. In this letter, we introduce AutoColor, the first learned method for estimating the optimal light source powers required for illuminating multi-color holograms. For this purpose, we establish the first multi-color hologram dataset using synthetic images and their depth information. We generate these synthetic images using an emerging pipeline that combines generative, large language, and monocular depth estimation models. Finally, we train our learned model on this dataset and experimentally demonstrate that AutoColor reduces the number of steps required to optimize multi-color holograms from more than 1000 to 70 iterations without compromising image quality.
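To make the role of the light source powers concrete, the sketch below models how a multi-color display could form an image: in each subframe a single phase-only hologram is lit by all three sources at once, and each color channel accumulates its own reconstruction weighted by that source's power. This is a minimal sketch under stated assumptions, not the AutoColor or Multi-color implementation: the angular spectrum propagator, the values in `WAVELENGTHS`, `PIXEL_PITCH` and `DISTANCE`, and the linear power-to-intensity model are our own illustrative choices.

```python
import numpy as np

# Hypothetical display parameters (illustrative, not taken from the paper).
WAVELENGTHS = (639e-9, 515e-9, 473e-9)  # red, green, blue [m]
PIXEL_PITCH = 8e-6  # hologram pixel pitch [m]
DISTANCE = 5e-3  # hologram-to-image-plane distance [m]


def propagate(field, wavelength, pitch=PIXEL_PITCH, distance=DISTANCE):
    """Angular spectrum propagation of a complex field over `distance`."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pitch)
    fy = np.fft.fftfreq(ny, d=pitch)
    FX, FY = np.meshgrid(fx, fy)  # shape (ny, nx)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.exp(1j * kz * distance) * (arg > 0)  # drop evanescent waves
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def multicolor_image(phases, powers, wavelengths=WAVELENGTHS):
    """Perceived color image from a sequence of multi-color holograms.

    phases: (T, H, W) phase-only holograms, one per subframe.
    powers: (T, C) power of light source c during subframe t.
    Returns (C, H, W): per-color intensity summed over the T subframes.
    """
    T, H, W = phases.shape
    image = np.zeros((len(wavelengths), H, W))
    for t in range(T):
        field = np.exp(1j * phases[t])  # unit-amplitude illumination
        for c, wavelength in enumerate(wavelengths):
            # The same hologram reconstructs differently at each wavelength.
            intensity = np.abs(propagate(field, wavelength)) ** 2
            # Assumption: perceived intensity scales linearly with source power.
            image[c] += powers[t, c] * intensity
    return image
```

Setting `powers` to the identity matrix recovers conventional time-multiplexed color, where only one source is on per subframe. Multi-color holograms allow nonzero off-diagonal entries, and in this toy model the whole `powers` matrix stands in for the light source powers that AutoColor estimates.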

Using the large language model (LLM) GPT-4 through its online interface, ChatGPT, we generated a series of detailed prompts with different keywords. Guided by these prompts, we built a large image dataset locally using text-to-image generation models and a super-resolution network. We then estimated the depth of each generated image with a monocular depth estimation network, and optimized multi-color holograms together with their light source powers using the Multi-color optimization pipeline. The entire dataset generation process was computationally intensive and took about ten days on multiple GPUs.
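The dataset generation steps above can be organized as a simple chain of stages. The sketch below is hypothetical: `Sample`, `expand_prompts` and `build_dataset` are illustrative names, the models are passed in as plain callables rather than tied to any specific library, and `expand_prompts` fills a keyword template as a stand-in for the GPT-4 prompt writing described in the text.

```python
from dataclasses import dataclass
from itertools import product
from typing import Any, Callable, Iterable, List, Tuple


@dataclass
class Sample:
    """One dataset entry (field names are illustrative)."""
    prompt: str
    image: Any
    depth: Any
    hologram: Any
    powers: Any


def expand_prompts(template: str, **keywords: Iterable[str]) -> List[str]:
    """Stand-in for LLM prompt writing: fill a template with every keyword combination."""
    names = list(keywords)
    return [
        template.format(**dict(zip(names, values)))
        for values in product(*(keywords[name] for name in names))
    ]


def build_dataset(
    prompts: Iterable[str],
    generate: Callable[[str], Any],  # text-to-image model
    upscale: Callable[[Any], Any],  # super-resolution network
    estimate_depth: Callable[[Any], Any],  # monocular depth estimator
    optimize: Callable[[Any, Any], Tuple[Any, Any]],  # returns (hologram, powers)
) -> List[Sample]:
    """Run every prompt through the generation, depth and optimization stages."""
    samples = []
    for prompt in prompts:
        image = upscale(generate(prompt))
        depth = estimate_depth(image)
        hologram, powers = optimize(image, depth)
        samples.append(Sample(prompt, image, depth, hologram, powers))
    return samples
```

Passing the stages as callables keeps the orchestration independent of any particular text-to-image, super-resolution or depth model, and lets each stage be replaced with a cheap stub when testing the pipeline.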

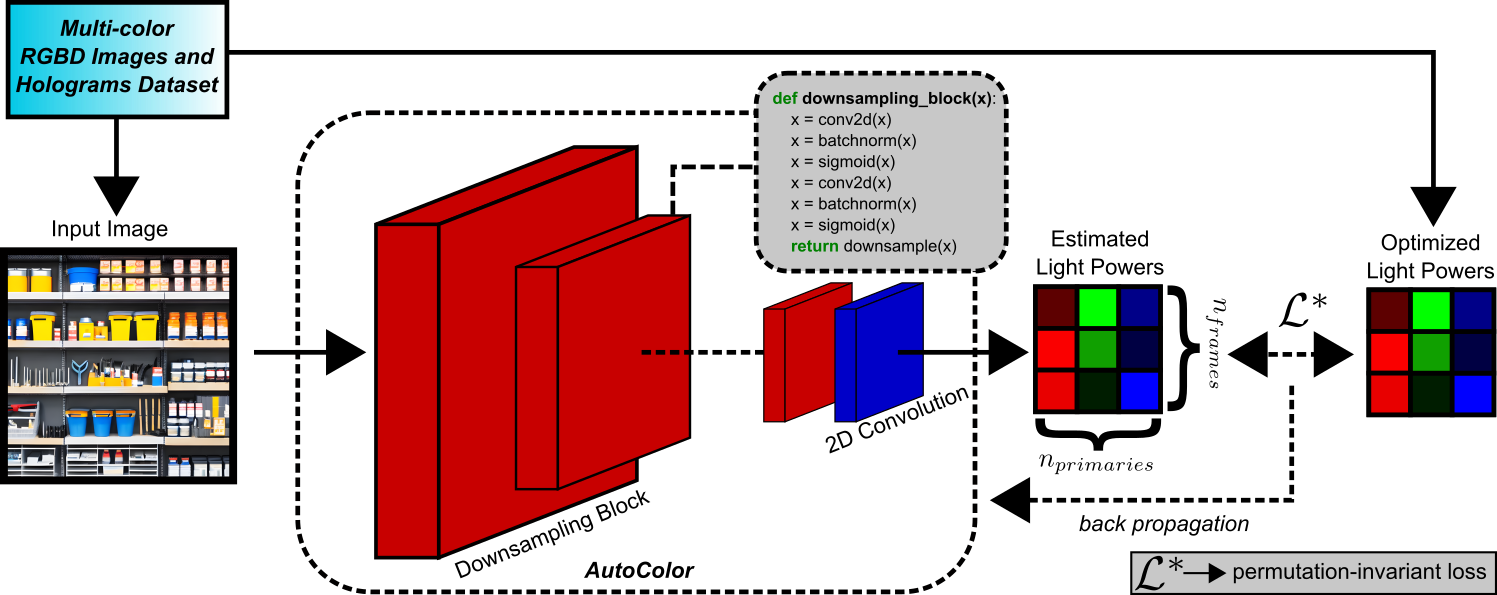

We used Multi-color, an existing pipeline, to generate multi-color holograms, and AutoColor uses a CNN to estimate light source powers from input images. The estimated light source powers are then used to optimize multi-color holograms with that existing pipeline. We validate the approach experimentally and show that AutoColor significantly improves computational efficiency by reducing the number of optimization steps. We also built a holographic display hardware prototype for quantitative evaluation, which we summarize below.
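The text does not describe the network architecture, so the following is only a hypothetical toy that shows the interface of such an estimator: an RGB image goes in, and a (subframes, colors) matrix of light source powers comes out. The two strided convolutions, global average pooling, linear head and sigmoid output are our assumptions, `PowerEstimator` and `conv2d_relu` are illustrative names, and the weights are random rather than trained; in practice the network would be trained on the dataset described above.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_relu(x, weight, bias, stride=1):
    """Valid 2D convolution (cross-correlation) followed by ReLU.

    x: (C_in, H, W), weight: (C_out, C_in, k, k), bias: (C_out,).
    """
    k = weight.shape[-1]
    windows = sliding_window_view(x, (k, k), axis=(1, 2))  # (C_in, H', W', k, k)
    windows = windows[:, ::stride, ::stride]
    out = np.einsum("chwij,ocij->ohw", windows, weight) + bias[:, None, None]
    return np.maximum(out, 0.0)


class PowerEstimator:
    """Hypothetical toy CNN mapping an RGB image to light source powers."""

    def __init__(self, subframes=3, colors=3, hidden=8, seed=0):
        rng = np.random.default_rng(seed)
        self.shape = (subframes, colors)
        self.w1 = rng.normal(0.0, 0.1, (hidden, 3, 3, 3))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 0.1, (hidden, hidden, 3, 3))
        self.b2 = np.zeros(hidden)
        self.w_out = rng.normal(0.0, 0.1, (subframes * colors, hidden))
        self.b_out = np.zeros(subframes * colors)

    def __call__(self, image):
        """image: (3, H, W) RGB values in [0, 1]; returns (subframes, colors)."""
        h = conv2d_relu(image, self.w1, self.b1, stride=2)
        h = conv2d_relu(h, self.w2, self.b2, stride=2)
        pooled = h.mean(axis=(1, 2))  # global average pooling -> (hidden,)
        logits = self.w_out @ pooled + self.b_out
        powers = 1.0 / (1.0 + np.exp(-logits))  # sigmoid keeps powers in (0, 1)
        return powers.reshape(self.shape)
```

The sigmoid keeps every estimated power between 0 and 1, which would correspond to a normalized drive level for each light source; that normalization is also an assumption of this sketch.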

AutoColor Overview

We demonstrate that this approach requires significantly fewer optimization steps and can produce holograms with a wide dynamic range at interactive rates. Based on our experimental analysis, we suggest that AutoColor opens a promising research direction for future holographic displays.

Quantitative Evaluation

Kaan Akşit, Koray Kavaklı and Yicheng Zhan are supported by the Royal Society’s RGS/R2/212229 Research Grants 2021 Round 2 and Meta Reality Labs inclusive rendering initiative 2022.

Hakan Urey is supported by the European Innovation Council’s HORIZON-EIC-2021-TRANSITION-CHALLENGES program Grant Number 101057672 and Tübitak’s 2247-A National Lead Researchers Program, Project Number 120C145.

Qi Sun is partially supported by the National Science Foundation (NSF) research grants #2225861 and #2232817.

@article{zhan2024autocolor,
  title={AutoColor: Learned Light Power Control for Multi-Color Holograms},
  author={Zhan, Yicheng and Kavakl{\i}, Koray and Urey, Hakan and Sun, Qi and Akşit, Kaan},
  journal={SPIE},
  year={2024},
  month=Jan,
  arxiv={2305.01611},
  html={https://complightlab.com/autocolor_},
  code={https://github.com/complight/autocolor},
  doi={https://doi.org/10.48550/arXiv.2305.01611}
}