ChromaCorrect: Prescription Correction in Virtual Reality Headsets through Perceptual Guidance

Ahmet H. Güzel*1 | Jeanne Beyazian2 | Praneeth Chakravarthula3 | Kaan Akşit*2

1University of Leeds, 2University College London, 3Princeton University, *Corresponding Authors

UCL Computational Light Laboratory

Biomedical Optics Express 2023

Abstract

A large portion of today’s world population suffers from vision impairments and wears prescription eyeglasses. However, eyeglasses cause additional bulk and discomfort when used with augmented and virtual reality headsets, thereby negatively impacting the viewer’s visual experience. In this work, we remove the need for prescription eyeglasses in Virtual Reality (VR) headsets by shifting the optical complexity entirely into software, and we propose a prescription-aware rendering approach that provides sharper and more immersive VR imagery. To this end, we develop a differentiable display and visual perception model that encapsulates display-specific parameters, the color and visual acuity of the human visual system, and user-specific refractive errors. Using this differentiable visual perception model, we optimize the rendered imagery on the display using stochastic gradient-descent solvers. This way, we provide sharper images without prescription glasses for people with vision impairments. We evaluate our approach on various displays, including desktops and VR headsets, and show significant quality and contrast improvements for users with vision impairments.

Optimization Pipeline

Step 1:

A screen with red, green, and blue (RGB) color primaries displays an input image.

Step 2:

A viewer’s eye forms the displayed image on the retina, blurred by a unique Point Spread Function (PSF) that describes the optical aberrations of that person’s eye.
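The blur in Step 2 can be sketched as a per-channel convolution of the displayed image with the eye's PSF. The minimal NumPy sketch below assumes circular (FFT-based) convolution, one PSF per RGB channel, and a Gaussian PSF as a hypothetical stand-in for a measured or Zernike-derived one; `gaussian_psf` and `retinal_image` are illustrative names, not the authors' code.

```python
import numpy as np


def gaussian_psf(size: int, sigma: float) -> np.ndarray:
    """Hypothetical stand-in PSF: a centered 2D Gaussian normalized to unit sum."""
    ax = np.arange(size) - size // 2
    xx, yy = np.meshgrid(ax, ax)
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()  # unit sum keeps overall brightness unchanged


def retinal_image(image: np.ndarray, psfs: np.ndarray) -> np.ndarray:
    """Blur each channel of `image` (3, H, W) with its own centered PSF (3, H, W).

    Assumes circular convolution via the FFT; ifftshift moves each PSF's center
    to the array origin before its transfer function (OTF) is computed.
    """
    otfs = np.fft.fft2(np.fft.ifftshift(psfs, axes=(-2, -1)))
    return np.fft.ifft2(np.fft.fft2(image) * otfs).real


# Usage: a random test image and per-channel blur widths (values are hypothetical).
rng = np.random.default_rng(0)
image = rng.random((3, 64, 64))
psfs = np.stack([gaussian_psf(64, s) for s in (2.0, 1.5, 2.5)])
blurred = retinal_image(image, psfs)
```

Using a different blur width per channel loosely mimics the wavelength dependence of the eye's PSF; circular convolution wraps at the image borders, which a real renderer might avoid with padding.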

Step 3:

The retina's cone cells convert the aberrated RGB image into a trichromatic sensation, described by the Long-, Medium-, and Short-wavelength (LMS) cone responses.
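One way to sketch Step 3 is a 3x3 matrix whose entries integrate each cone's spectral sensitivity against each display primary's emission spectrum, applied per pixel to a linear (not gamma-encoded) RGB image. All spectra below are hypothetical Gaussian approximations, not measured display spectra or tabulated cone fundamentals, and normalizing the rows so that display white maps to LMS (1, 1, 1) is an assumed convention; the function names are illustrative only.

```python
import numpy as np

WAVELENGTHS_NM = np.arange(400.0, 701.0, 1.0)  # visible range sampled every 1 nm


def gaussian_band(center_nm: float, width_nm: float) -> np.ndarray:
    """Gaussian-shaped spectrum over WAVELENGTHS_NM (a crude, hypothetical approximation)."""
    return np.exp(-0.5 * ((WAVELENGTHS_NM - center_nm) / width_nm) ** 2)


# Hypothetical display primaries (rows: R, G, B); a real display would be measured.
PRIMARIES = np.stack([gaussian_band(620, 15), gaussian_band(530, 20), gaussian_band(460, 12)])
# Crude Gaussian stand-ins for L, M, S cone sensitivities (not tabulated cone fundamentals).
CONES = np.stack([gaussian_band(565, 50), gaussian_band(540, 45), gaussian_band(445, 25)])


def rgb_to_lms_matrix(primaries: np.ndarray, cones: np.ndarray) -> np.ndarray:
    """Entry (i, j) integrates cone i's sensitivity against primary j's spectrum."""
    m = cones @ primaries.T  # Riemann sum over the 1 nm bins
    return m / m.sum(axis=1, keepdims=True)  # assumption: display white maps to LMS (1, 1, 1)


def rgb_image_to_lms(image: np.ndarray, m: np.ndarray) -> np.ndarray:
    """Apply the 3x3 matrix at every pixel of a linear RGB image shaped (3, H, W)."""
    return np.einsum("ij,jhw->ihw", m, image)


M = rgb_to_lms_matrix(PRIMARIES, CONES)
white_lms = rgb_image_to_lms(np.ones((3, 4, 4)), M)
```

Because the matrix depends on the primaries, swapping in a different display's spectra changes the LMS response, which is one way display-specific parameters can enter such a model.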

Step 4:

Our optimization pipeline relies on the perceptually guided model described in Steps 1 to 3. At each optimization step, it converts a given RGB image to LMS space while accounting for the viewer's PSFs, which are modelled using Zernike polynomials.
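To illustrate how a prescription can become a Zernike-based PSF, the sketch below converts sphere, cylinder, and axis into second-order Zernike coefficients using the power-vector relations of Thibos et al., then computes a monochromatic PSF by Fraunhofer (FFT) propagation of the pupil function. The pupil radius, wavelength, grid size, and function names are hypothetical choices; sign conventions differ between sources, and the sketch ignores chromatic aberration and the wavelength-dependent scaling of the PSF grid, so it is not the authors' exact model.

```python
import numpy as np


def prescription_to_zernike(sphere_d, cylinder_d, axis_deg, pupil_radius_mm):
    """Second-order Zernike coefficients (micrometres) from a spectacle prescription."""
    alpha = np.deg2rad(axis_deg)
    m = sphere_d + cylinder_d / 2.0  # spherical equivalent (D)
    j0 = -(cylinder_d / 2.0) * np.cos(2.0 * alpha)
    j45 = -(cylinder_d / 2.0) * np.sin(2.0 * alpha)
    r2 = pupil_radius_mm**2  # D * mm^2 = micrometres
    c20 = -m * r2 / (4.0 * np.sqrt(3.0))
    c22 = -j0 * r2 / (2.0 * np.sqrt(6.0))
    c2m2 = -j45 * r2 / (2.0 * np.sqrt(6.0))
    return c20, c22, c2m2


def zernike_psf(c20, c22, c2m2, wavelength_um=0.55, grid=128):
    """Monochromatic PSF from defocus and astigmatism via an FFT of the pupil field."""
    x = np.linspace(-2.0, 2.0, grid)  # pupil radius = 1, zero-padded by a factor of 2
    xx, yy = np.meshgrid(x, x)
    rho, theta = np.hypot(xx, yy), np.arctan2(yy, xx)
    pupil = (rho <= 1.0).astype(float)
    wavefront_um = (
        c20 * np.sqrt(3.0) * (2.0 * rho**2 - 1.0)  # Z(2, 0): defocus
        + c22 * np.sqrt(6.0) * rho**2 * np.cos(2.0 * theta)  # Z(2, 2): with/against-the-rule astigmatism
        + c2m2 * np.sqrt(6.0) * rho**2 * np.sin(2.0 * theta)  # Z(2, -2): oblique astigmatism
    )
    field = pupil * np.exp(1j * 2.0 * np.pi * wavefront_um / wavelength_um)
    psf = np.abs(np.fft.fftshift(np.fft.fft2(field))) ** 2
    return psf / psf.sum()  # unit energy


# Usage: a -1.50 D myope with a hypothetical 2 mm pupil radius.
coeffs = prescription_to_zernike(-1.50, 0.0, 0.0, pupil_radius_mm=2.0)
psf_myopic = zernike_psf(*coeffs)
psf_ideal = zernike_psf(0.0, 0.0, 0.0)
```

The defocused PSF spreads its energy over a wider area, so its peak is lower than the aberration-free (diffraction-limited) PSF; a cylinder term would additionally stretch the blur along one orientation.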

Step 5:

Our loss function penalizes the difference between the simulated image produced by the perceptually guided model and a target image, both in LMS space. Finally, our differentiable optimization pipeline finds the input RGB images that minimize this loss using a Stochastic Gradient Descent solver.
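The loop in Steps 4 and 5 can be sketched end to end for a linear forward model (blur, then RGB-to-LMS). This is a simplified stand-in, not the authors' implementation: it assumes a plain squared-error loss in LMS space, Gaussian PSFs, and a hand-picked RGB-to-LMS matrix, and it uses full-batch projected gradient descent with a hand-derived gradient instead of a stochastic solver with automatic differentiation. `PerceptualModel` and `optimize` are hypothetical names.

```python
import numpy as np


def gaussian_psf(size, sigma):
    """Hypothetical stand-in PSF: centered 2D Gaussian with unit sum."""
    ax = np.arange(size) - size // 2
    xx, yy = np.meshgrid(ax, ax)
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()


class PerceptualModel:
    """Linear forward model: per-channel PSF blur, then an RGB-to-LMS matrix."""

    def __init__(self, psfs, rgb_to_lms):
        self.otfs = np.fft.fft2(np.fft.ifftshift(psfs, axes=(-2, -1)))
        self.m = rgb_to_lms

    def blur(self, image):
        return np.fft.ifft2(np.fft.fft2(image) * self.otfs).real

    def blur_adjoint(self, image):
        # Adjoint of circular convolution: multiply by the conjugate OTF (correlation).
        return np.fft.ifft2(np.fft.fft2(image) * np.conj(self.otfs)).real

    def to_lms(self, image):
        return np.einsum("ij,jhw->ihw", self.m, image)

    def to_lms_adjoint(self, lms):
        return np.einsum("ij,ihw->jhw", self.m, lms)

    def loss(self, image, target_lms):
        """0.5 * squared error in LMS space, plus the residual for the gradient."""
        residual = self.to_lms(self.blur(image)) - target_lms
        return 0.5 * float(np.sum(residual**2)), residual


def optimize(model, target_rgb, steps=100):
    """Projected gradient descent on the displayed RGB image, kept in [0, 1]."""
    target_lms = model.to_lms(target_rgb)  # what an aberration-free eye would perceive
    # |OTF| <= 1 for a unit-sum PSF, so ||M||_2^2 bounds the gradient's Lipschitz constant.
    lr = 1.0 / np.linalg.norm(model.m, 2) ** 2
    x = target_rgb.copy()  # start from the uncorrected ("conventional") image
    loss, residual = model.loss(x, target_lms)
    losses = [loss]
    for _ in range(steps):
        grad = model.blur_adjoint(model.to_lms_adjoint(residual))  # A^T (A x - t)
        x = np.clip(x - lr * grad, 0.0, 1.0)  # project back to the displayable range
        loss, residual = model.loss(x, target_lms)
        losses.append(loss)
    return x, losses


# Usage with hypothetical values: a random target and a hand-picked RGB-to-LMS matrix.
rng = np.random.default_rng(1)
target = rng.random((3, 32, 32))
psfs = np.stack([gaussian_psf(32, s) for s in (1.5, 1.2, 2.0)])
rgb_to_lms = np.array([[0.55, 0.40, 0.05], [0.30, 0.60, 0.10], [0.02, 0.08, 0.90]])
model = PerceptualModel(psfs, rgb_to_lms)
corrected, losses = optimize(model, target)
```

The step size of 1/||M||² keeps every projected step non-increasing in loss for this convex problem; a nonlinear perceptual model would instead rely on an autodiff framework and an adaptive optimizer.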

Evaluation

1) Hardware Setup

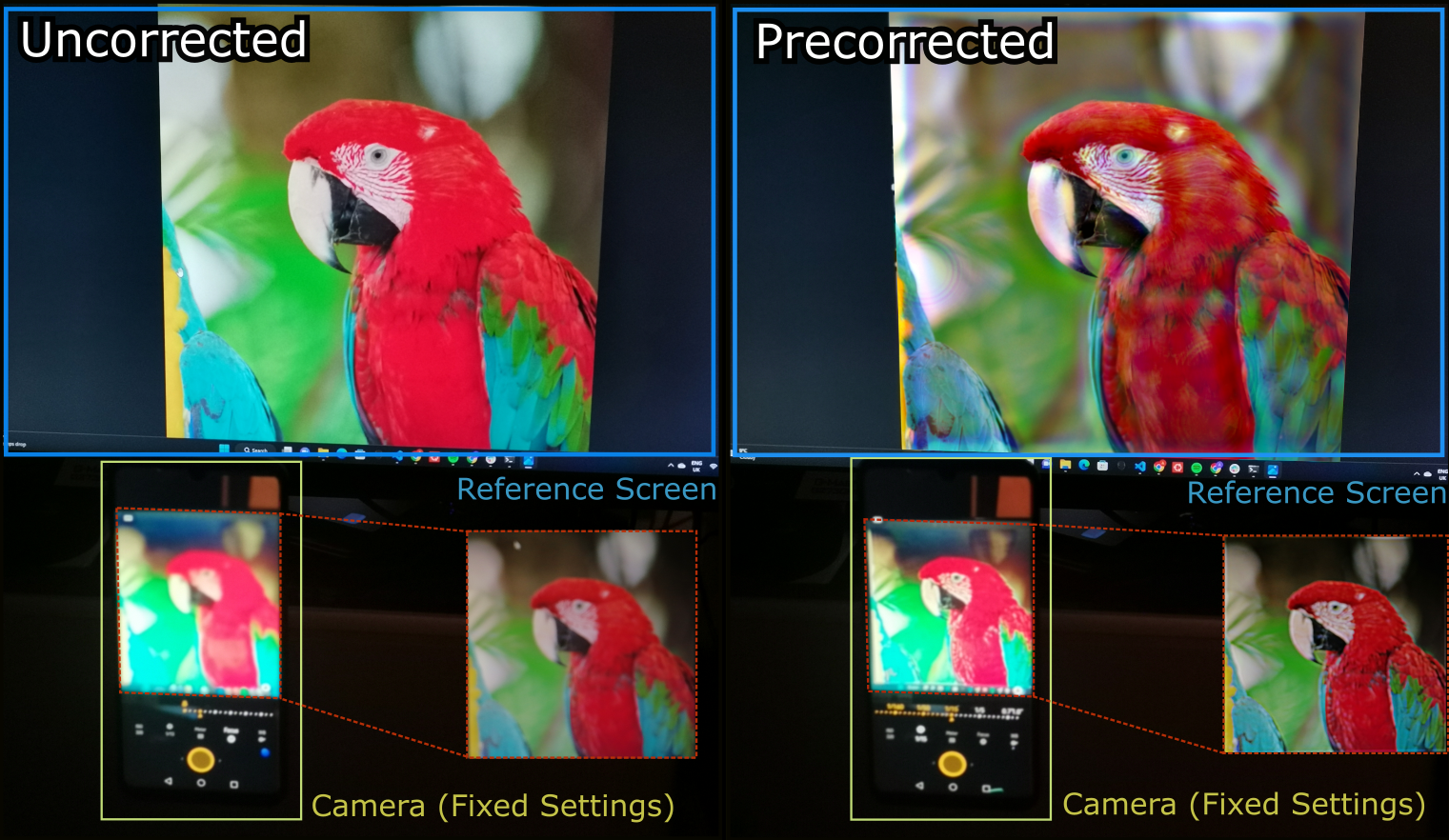

1.a) Conventional Display

For every experimental image capture, we fixed the camera's pose, ISO, and focus setting to ensure a consistent view corresponding to a nearsighted prescription of -1.50 D.
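As a worked example of what this prescription means (ignoring vertex distance), a spherical correction of -1.50 D places the uncorrected far point of the eye at:

```latex
d_{\text{far}} = \frac{1}{\lvert P \rvert} = \frac{1}{1.50\ \text{D}} \approx 0.67\ \text{m}
```

Content farther away than roughly 67 cm therefore appears blurred to such a viewer without correction.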

1.b) Virtual Reality Headset

(A) We capture images from our virtual reality headset with a camera. To emulate a refractive error in the visual system, we use a defocus lens. (B) We capture images from behind the defocus lens with a fixed camera pose and focus to evaluate the reconstructed images.

1.c) Results

ChromaCorrect improves on the conventional approach in terms of color and contrast.

2) Simulation

In the second part, we evaluate our method with different prescriptions that model various refractive errors of the eye. All images in this part are evaluated in simulated LMS space.

2.a) Results

Here we compare outputs for five different refractive errors (myopia, hyperopia, hyperopic astigmatism, myopic astigmatism, and myopia with hyperopic astigmatism) on five sample input images. We provide simulated LMS-space representations of the target image, the conventional method's output, and our method's output. To compare the methods, we report FLIP per-pixel difference maps with their mean values (lower is better), along with SSIM and PSNR (higher is better for both). In simulated LMS space, our method scores better on every image quality metric in every experiment. The contrast improvement of our method over the conventional method can also be observed perceptually.
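Of the metrics listed above, PSNR is simple enough to sketch directly: it is 10 log10(MAX^2 / MSE) in decibels. The helper below is a hypothetical sketch assuming images scaled to [0, 1]; it is agnostic to whether the channels are RGB or simulated LMS. For SSIM, scikit-image provides `skimage.metrics.structural_similarity`, and FLIP has its own reference implementation from NVIDIA.

```python
import numpy as np


def psnr(reference, test, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images with values in [0, max_value]."""
    reference = np.asarray(reference, dtype=float)
    test = np.asarray(test, dtype=float)
    mse = np.mean((reference - test) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value**2 / mse))


# Usage: a uniform error of 0.1 gives MSE = 0.01, i.e. about 20 dB.
reference = np.zeros((3, 8, 8))
value = psnr(reference, np.full((3, 8, 8), 0.1))
```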

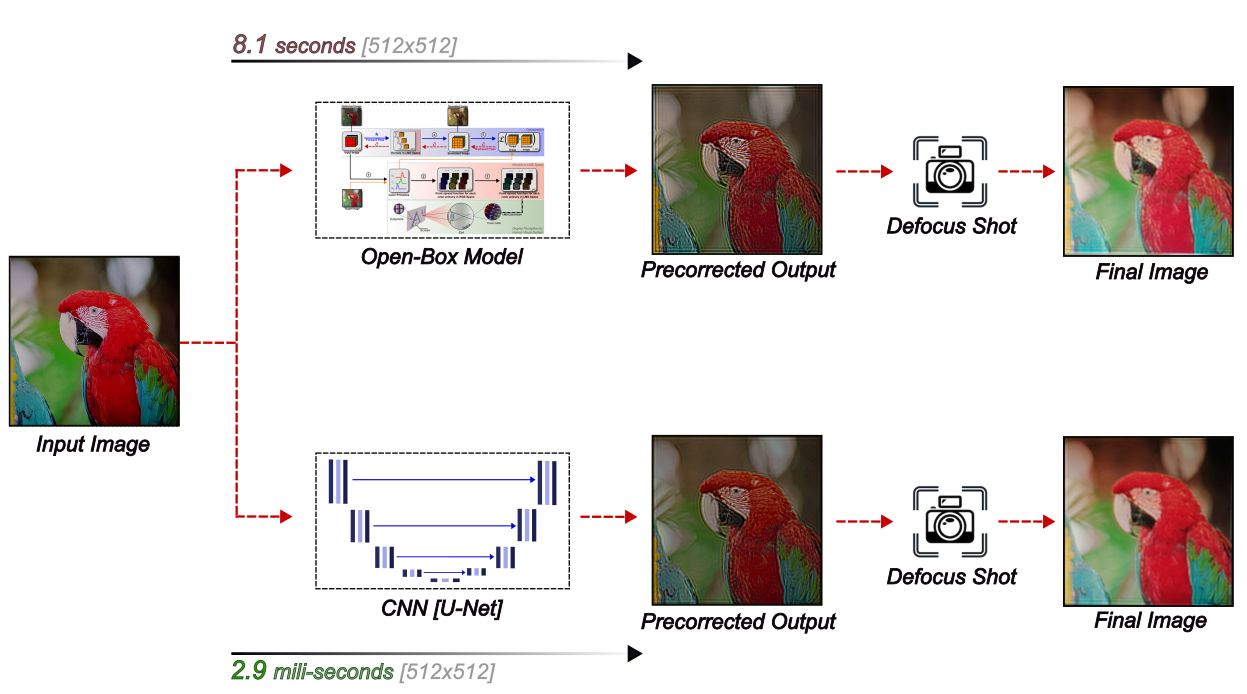

Learned Model (Neural ChromaCorrect)

We implement a semi-supervised deep learning model that reconstructs optimized images from their original RGB versions, using a U-Net architecture. A single forward pass of such a network is better suited to real-time applications than an iterative optimization process.

The learned model significantly reduces image generation time, averaging 2.9 milliseconds per corrected image compared to 8.127 seconds for the original iterative method, a speedup of approximately 2800 times.
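The stated speedup follows directly from the two timings:

```latex
\frac{8.127\ \text{s}}{2.9\ \text{ms}} = \frac{8127\ \text{ms}}{2.9\ \text{ms}} \approx 2802
```

For comparison, a display refreshing at a hypothetical 90 Hz has a frame budget of 1/90 s, or about 11.1 ms, so 2.9 ms per image fits within a single frame at that rate.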

Citation

@article{guzel2023prescription,
  title = {ChromaCorrect: Prescription Correction in Virtual Reality Headsets through Perceptual Guidance},
  author = {Güzel, Ahmet and Beyazian, Jeanne and Chakravarthula, Praneeth and Akşit, Kaan},
  journal = {Biomedical Optics Express},
  pages = {2166--2180},
  month = apr,
  year = {2023},
  language = {en},
  video = {https://www.youtube.com/watch?v=fjexa7ga-tQ},
  note = {\textbf{\textcolor{red}{Invited to Optica's Color and Data blast 2023}}},
  doi = {https://doi.org/10.1364/BOE.485776},
}